Introduction: A Breakthrough in AI Infrastructure

China has officially activated what is considered the world's largest distributed AI computing pool. This new infrastructure, named the Future Network Test Facility (FNTF), promises to revolutionize how artificial intelligence models are trained by overcoming the physical limitations of single data centers through an ultra-high-speed optical network. Developed over more than a decade, the project aims to unify computing resources scattered across the country into a single cohesive system.

Context: The FNTF Project

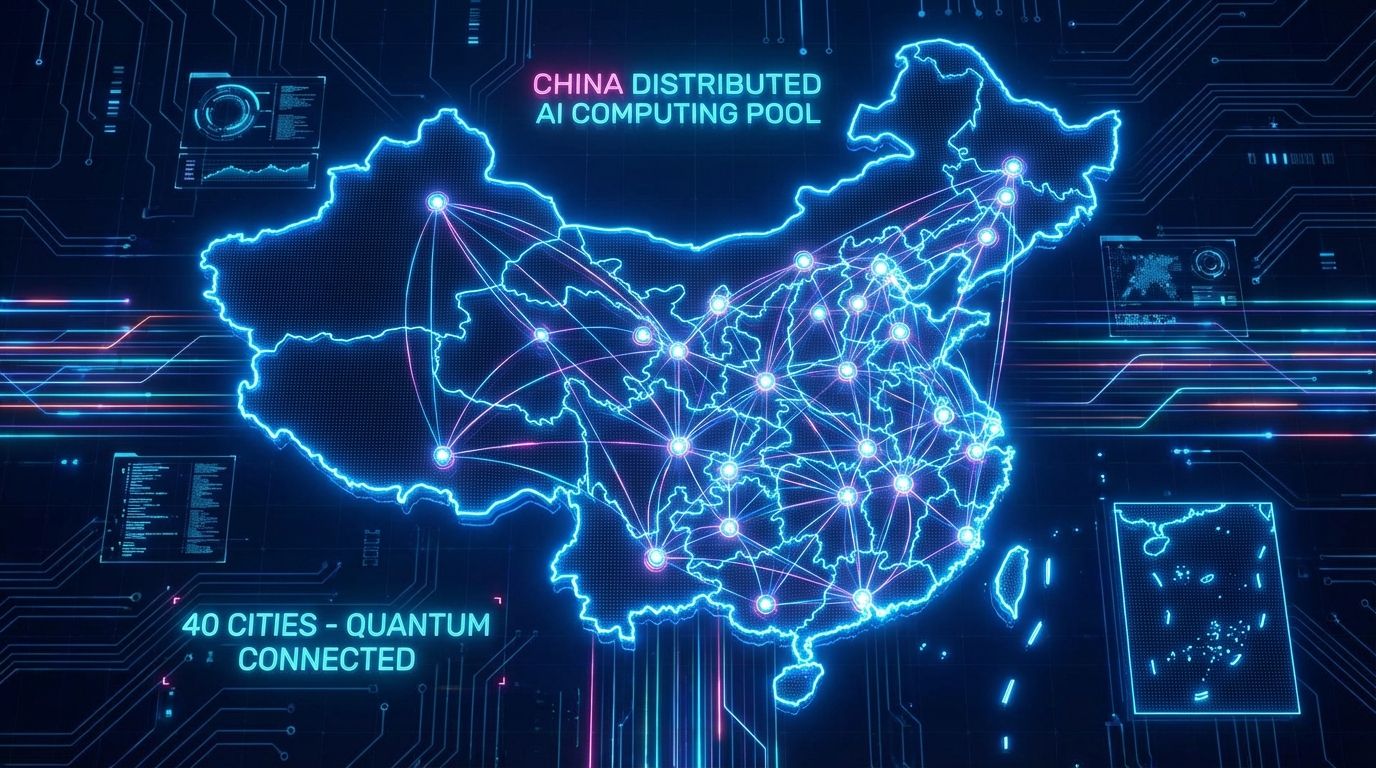

The FNTF represents China's first major national infrastructure project in the information and communication sector. Operational since December 3, the system connects distant computing centers through a network spanning over 2,000 km. The scale is impressive: the total optical transmission length exceeds 55,000 km, enough to circle the equator (about 40,075 km) roughly 1.4 times. The network covers 40 cities and can support 128 heterogeneous networks simultaneously.

For further technical and geopolitical details on this topic, you can refer to the report by the South China Morning Post.

"The 2,000km-wide computing power pool formed via this network could achieve 98% of efficiency of a single data center."

Liu Yunjie, member of the Chinese Academy of Engineering

The Challenge: Latency and Coordination

The fundamental problem of distributed computing has always been latency. When multiple computing centers are connected, the time required to coordinate data usually costs 20% to 40% of efficiency as overhead. This delay is critical when training advanced AI models. The US approach, adopted by giants like Google and Meta and by xAI with its Colossus cluster, concentrates GPUs in a single location to minimize latency, accepting the risks that come with high power consumption and geographic concentration.
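To make the overhead figures concrete, here is a minimal Python sketch. It assumes that an "efficiency loss" of a given fraction simply removes that fraction of useful throughput, which is a simplification and not something the source specifies. The pool size of 10,000 GPUs and the function name `effective_gpus` are hypothetical, chosen only for illustration; the 2% case corresponds to the 98% efficiency claimed for the FNTF.

```python
def effective_gpus(total_gpus: int, overhead: float) -> float:
    """Return the GPUs' worth of useful work left after coordination overhead.

    Assumption: overhead is the fraction of throughput lost to coordination.
    """
    if not 0.0 <= overhead < 1.0:
        raise ValueError("overhead must be in the range [0, 1)")
    return total_gpus * (1.0 - overhead)


POOL_SIZE = 10_000  # hypothetical pool size, not a figure from the article

# Overhead fractions: the 20%-40% range cited for typical distributed
# setups, and the 2% implied by the claimed 98% efficiency.
scenarios = {
    "typical distributed (low end)": 0.20,
    "typical distributed (high end)": 0.40,
    "claimed for FNTF": 0.02,
}

for label, overhead in scenarios.items():
    useful = effective_gpus(POOL_SIZE, overhead)
    print(f"{label}: about {useful:,.0f} of {POOL_SIZE:,} GPUs doing useful work")
```

Under this simple model, a pool losing 40% to coordination does the work of roughly 6,000 GPUs out of 10,000, while one losing 2% does the work of roughly 9,800.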

Solution: High-Speed Deterministic Networks

The Chinese solution relies on a deterministic network that drastically reduces latency, allowing the distributed AI computing pool to operate almost as a single entity.

- Record Efficiency: The pool is claimed to reach 98% of the efficiency of a single data center, which would nearly eliminate the latency bottleneck.

- Iteration Speed: Training an AI model with hundreds of billions of parameters requires over 500,000 iterations. On the FNTF network, each iteration takes about 16 seconds, more than 20 seconds less than the 36-plus seconds needed without this capability. Over 500,000 iterations, that difference adds up to at least 10 million seconds, or close to four months saved on the total training cycle.

- Data Transfer: During the launch, a 72-terabyte data set generated by the FAST telescope was transmitted across 1,000 km in just 1.6 hours. On the regular internet, the same transfer would have taken about 699 days.
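The figures in the list above can be checked with some quick arithmetic. The Python sketch below uses only the numbers quoted in the article; the function names are illustrative, and it assumes a terabyte means 10^12 bytes (decimal units), which the source does not state.

```python
SECONDS_PER_DAY = 86_400


def training_days(iterations: int, seconds_per_iteration: float) -> float:
    """Total wall-clock training time in days for a fixed iteration count."""
    return iterations * seconds_per_iteration / SECONDS_PER_DAY


def throughput_gbps(terabytes: float, seconds: float) -> float:
    """Average transfer rate in gigabits per second.

    Assumption: 1 TB = 10**12 bytes (decimal terabytes).
    """
    bits = terabytes * 1e12 * 8
    return bits / seconds / 1e9


# Iteration speed: 500,000 iterations at 16 s versus 36 s each.
ITERATIONS = 500_000
days_with_fntf = training_days(ITERATIONS, 16)
days_without = training_days(ITERATIONS, 36)
days_saved = days_without - days_with_fntf

# Data transfer: 72 TB in 1.6 hours versus 699 days.
fntf_gbps = throughput_gbps(72, 1.6 * 3600)
internet_mbps = throughput_gbps(72, 699 * SECONDS_PER_DAY) * 1000

print(f"Training with FNTF:    {days_with_fntf:.1f} days")
print(f"Training without FNTF: {days_without:.1f} days")
print(f"Time saved:            {days_saved:.1f} days")
print(f"FNTF transfer rate:    {fntf_gbps:.1f} Gbit/s")
print(f"Implied internet rate: {internet_mbps:.2f} Mbit/s")
```

Under these assumptions, the saving comes to roughly 116 days, the FAST transfer corresponds to a sustained rate of about 100 Gbit/s, and the 699-day comparison implies a baseline connection of about 9.5 Mbit/s.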

Conclusion

If the 98% efficiency figure holds at scale, China may have found a winning strategy to compete in AI despite restrictions on advanced chips. While the US focuses on centralized brute force, China is betting on resilience and geographic distribution, turning the network itself into a supercomputer.

Distributed AI Computing FAQ

What is the FNTF distributed AI computing pool?

It is a Chinese infrastructure connecting computing centers in 40 cities via 55,000 km of optical fiber, enabling them to work as a single computer for AI training.

What are the advantages of this distributed AI computing pool over traditional systems?

It is claimed to reach 98% of the efficiency of a single data center, drastically reducing latency and saving months in AI model training cycles.

How fast is data transfer on the new Chinese network?

The network demonstrated it can transfer 72 terabytes of data in 1.6 hours, a task that would take almost two years (699 days) on a standard internet connection.

How does the Chinese approach differ from the US one?

The US centralizes GPUs in massive single data centers (low latency but high local power usage), while China distributes computing across 2,000 km using advanced networks to coordinate resources.