Introduction

Amazon has announced the development of AI-powered smart glasses designed for its delivery drivers. These devices represent a significant step toward logistics automation, enabling drivers to deliver packages more efficiently and safely without constantly relying on their smartphones.

How Amazon's AI Smart Glasses Work

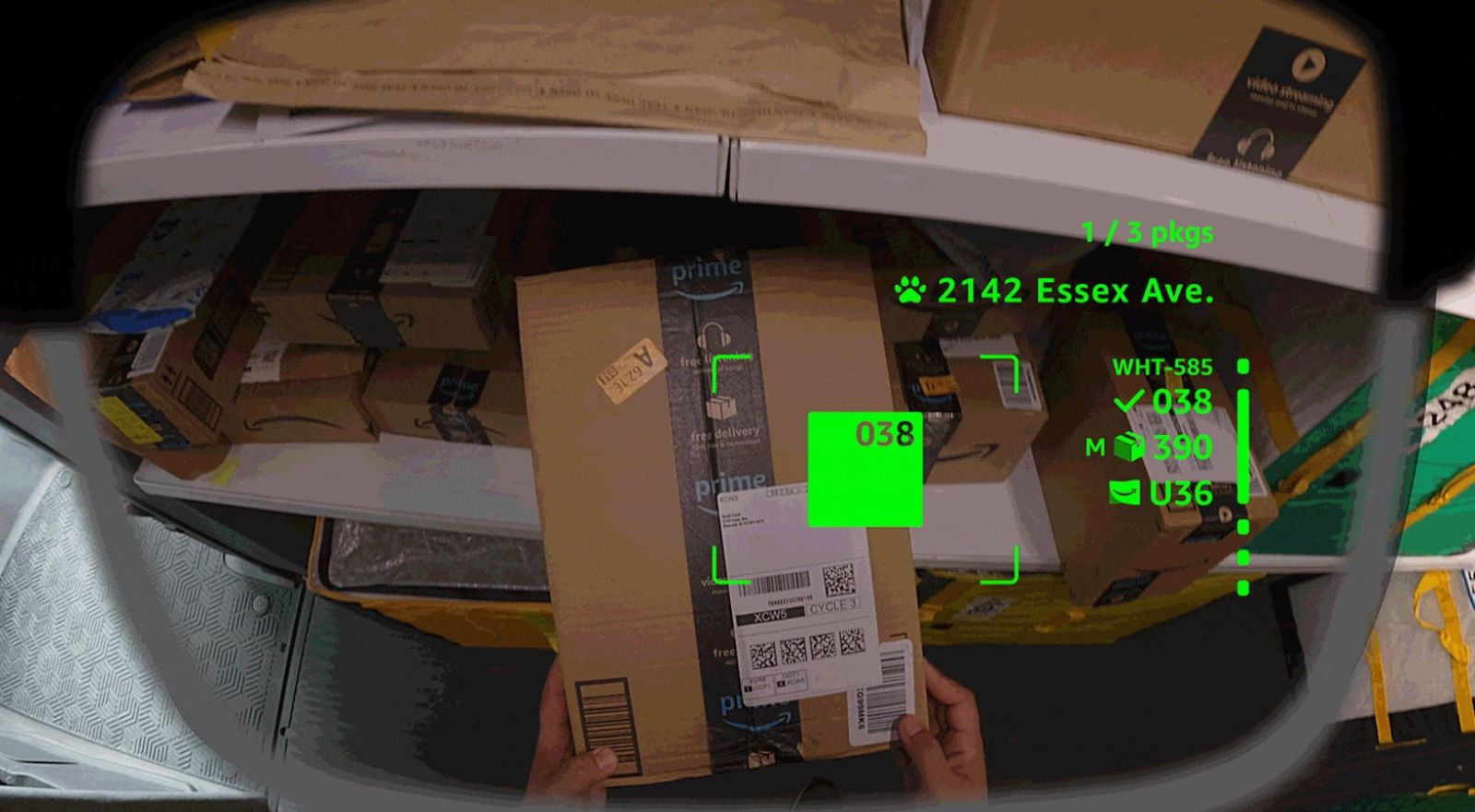

Amazon's smart glasses combine AI-powered sensors and computer vision to provide drivers with a completely hands-free experience. Drivers can scan packages, follow turn-by-turn directions, and capture proof of delivery directly in their field of view, without touching their phones.

The device activates automatically when the driver arrives at a delivery location. The glasses guide the driver to locate the package in the vehicle and then navigate to the delivery address. They are particularly useful in multi-unit apartment complexes and commercial buildings, where detailed directions reduce confusion and delays.

Key Features

- Hands-free GPS navigation: Turn-by-turn directions displayed directly in the field of view

- Package scanning: Reading barcodes without using a smartphone

- Hazard detection: Sensors alert drivers to obstacles and low-light conditions

- Proof of delivery: Automatic capture of delivery confirmation

- Prescription lens support: Compatible with prescription lenses and with transition lenses that automatically adjust to light

Design and Components

The glasses are paired with a controller worn in the driver's work vest. This controller contains operational controls, a swappable battery, and a dedicated emergency button. The modular design allows drivers to use the device throughout their entire shift without interruption.

Future Capabilities

Amazon plans to expand the glasses' capabilities in the near future. The platform will provide real-time defect detection, immediately notifying drivers if they accidentally deliver a package to the wrong address. Additionally, the glasses will be able to detect pets in yards and automatically adjust to low-light conditions.

Testing Phase and Rollout

Amazon is currently trialing the smart glasses with delivery drivers in North America. The company intends to refine the technology further before a wider rollout. This trial phase is crucial for gathering real-world feedback from drivers and optimizing device usability across diverse operational scenarios.

An AI Ecosystem for Logistics

The announcement of smart glasses is accompanied by other AI innovations from Amazon. The e-commerce giant also introduced Blue Jay, a robotic arm that works alongside warehouse employees to pick items from shelves and sort them. Additionally, Amazon launched Eluna, an AI tool that provides real-time operational insights in Amazon warehouses.

Together, these three innovations (smart glasses, robotics, and analytical software) represent a coherent strategy for transforming Amazon's supply chain operations.

Impact on Delivery and Safety

Amazon's primary goal is to reduce delivery time by putting detailed information and directions directly in the driver's field of view. Because drivers need to check their smartphones less often, they can stay more aware of their surroundings, which should lower accident risk. Detailed directions in complex environments such as apartment complexes and commercial buildings should also speed up deliveries.

Historical Context

The announcement is not surprising to the industry: Reuters previously reported that Amazon was developing smart glasses. This move aligns with a broader industry trend toward wearable devices and augmented reality for professional applications.

FAQ

What exactly is the AI assistant in Amazon's smart glasses?

The artificial intelligence in the glasses uses computer vision and sensors to analyze the environment, provide directions, detect hazards, and scan packages in real time, reducing reliance on the driver's smartphone.

When will AI smart glasses be available to all delivery drivers?

Amazon is currently conducting trials with drivers in North America. The company has not provided a specific launch date, but it intends to refine the technology before a wider rollout.

How do AI smart glasses improve driver safety?

The glasses reduce the need to check a smartphone while making deliveries, so drivers can stay aware of their surroundings. They also warn of obstacles and low-light conditions, reducing accident risk.

Do the AI glasses support prescription lenses?

Yes, Amazon's glasses support prescription lenses as well as transition lenses that automatically adapt to lighting conditions, so drivers who need vision correction can use them.

What is the role of the controller worn in the vest?

The controller contains operational controls, a swappable battery to ensure autonomy during shifts, and an emergency button for critical situations.

Can the glasses detect hazards beyond simple obstacles?

Yes. According to Amazon's plans, future versions will detect pets in yards, notify drivers in real time if a package is delivered to the wrong address, and automatically adjust to low-light conditions.